uname

uname -a

uname -v

uname --help

cat /etc/issue.net

cat /etc/redhat-release

lsb_release -a ==> I prefer this command
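For scripts that need the same information, Python's standard `platform` module exposes the fields that `uname` prints. This is a minimal sketch, not a replacement for the commands above. The helper names `os_summary` and `parse_os_release` are hypothetical. It also assumes the distribution details live in `/etc/os-release`, which most current Linux distributions provide; that is a different file from `/etc/issue.net` or `/etc/redhat-release`.

```python
import os
import platform

def os_summary() -> dict:
    """Roughly what `uname -a` reports, collected through the platform module."""
    u = platform.uname()
    return {
        "system": u.system,    # kernel name, like `uname -s`
        "node": u.node,        # host name, like `uname -n`
        "release": u.release,  # kernel release, like `uname -r`
        "version": u.version,  # kernel version, like `uname -v`
        "machine": u.machine,  # hardware name, like `uname -m`
    }

def parse_os_release(text: str) -> dict:
    """Parse KEY=value lines (assumed /etc/os-release format) into a dict.

    Only simple quoting is handled: surrounding single or double quotes are stripped.
    """
    info = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        info[key] = value.strip().strip('"').strip("'")
    return info

if __name__ == "__main__":
    print(os_summary())
    # Assumption: /etc/os-release exists (typical on modern Linux, absent elsewhere)
    if os.path.exists("/etc/os-release"):
        with open("/etc/os-release") as f:
            release = parse_os_release(f.read())
        # PRETTY_NAME holds a human-readable distribution name
        print(release.get("PRETTY_NAME", "unknown"))
```

On Python 3.10 and later, `platform.freedesktop_os_release()` reads the same file for you.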

vnetlib -- uninstall vmx86

vnetlib -- install vmx86

Use System.getProperty("java.vm.name"). For me, it gives "Java HotSpot(TM) 64-Bit Server VM" on HotSpot and "Oracle JRockit(R)" on JRockit, although different versions/platforms may give slightly different results, so the most reliable method may be a case-insensitive substring check:

String jvmName = System.getProperty("java.vm.name");
boolean isHotSpot = jvmName.toUpperCase().indexOf("HOTSPOT") != -1;
boolean isJRockit = jvmName.toUpperCase().indexOf("JROCKIT") != -1;

The problem with java.vendor is that the same vendor may produce multiple JVMs; indeed, since Oracle's acquisitions of BEA and Sun, both HotSpot and JRockit now report the same value: Oracle Corporation.

The problem with running java -version is that the JVM can be installed on a machine yet not be present in the PATH. For Windows, scanning the registry could be a better approach.

from numpy import array, genfromtxt

# y = mx + b
# m is slope, b is y-intercept

def compute_error_for_line_given_points(b, m, points):
    # Mean squared error of the line y = m*x + b over all (x, y) points
    totalError = 0
    for i in range(0, len(points)):
        x = points[i, 0]
        y = points[i, 1]
        totalError += (y - (m * x + b)) ** 2
    return totalError / float(len(points))

def step_gradient(b_current, m_current, points, learningRate):
    # One gradient-descent step: accumulate the partial derivatives of the
    # mean squared error with respect to b and m, then move against them
    b_gradient = 0
    m_gradient = 0
    N = float(len(points))
    for i in range(0, len(points)):
        x = points[i, 0]
        y = points[i, 1]
        b_gradient += -(2/N) * (y - ((m_current * x) + b_current))
        m_gradient += -(2/N) * x * (y - ((m_current * x) + b_current))
    new_b = b_current - (learningRate * b_gradient)
    new_m = m_current - (learningRate * m_gradient)
    return [new_b, new_m]

def gradient_descent_runner(points, starting_b, starting_m, learning_rate, num_iterations):
    # Repeat the update step a fixed number of times
    b = starting_b
    m = starting_m
    for i in range(num_iterations):
        b, m = step_gradient(b, m, array(points), learning_rate)
    return [b, m]

def run():
    # data.csv: one "x,y" pair per row
    points = genfromtxt("data.csv", delimiter=",")
    learning_rate = 0.0001
    initial_b = 0  # initial y-intercept guess
    initial_m = 0  # initial slope guess
    num_iterations = 1000000
    print("Starting gradient descent at b = {0}, m = {1}, error = {2}".format(initial_b, initial_m, compute_error_for_line_given_points(initial_b, initial_m, points)))
    print("Running...")
    [b, m] = gradient_descent_runner(points, initial_b, initial_m, learning_rate, num_iterations)
    print("After {0} iterations b = {1}, m = {2}, error = {3}".format(num_iterations, b, m, compute_error_for_line_given_points(b, m, points)))

if __name__ == '__main__':
    run()
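`step_gradient` applies the partial derivatives of the mean squared error that `compute_error_for_line_given_points` returns. Written out for N points, with the symbol \(\eta\) (notation introduced here) standing for `learningRate`, the quantities the loop accumulates and the update it makes are:

$$
E(m, b) = \frac{1}{N}\sum_{i=1}^{N}\bigl(y_i - (m x_i + b)\bigr)^2
$$

$$
\frac{\partial E}{\partial b} = -\frac{2}{N}\sum_{i=1}^{N}\bigl(y_i - (m x_i + b)\bigr),
\qquad
\frac{\partial E}{\partial m} = -\frac{2}{N}\sum_{i=1}^{N} x_i\bigl(y_i - (m x_i + b)\bigr)
$$

$$
b \leftarrow b - \eta\,\frac{\partial E}{\partial b},
\qquad
m \leftarrow m - \eta\,\frac{\partial E}{\partial m}
$$

For a small enough \(\eta\), each step lowers E; too large a step can make b and m diverge instead.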

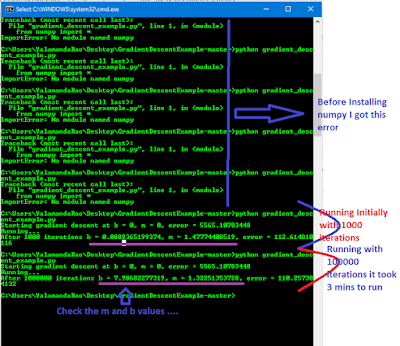
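The loops above visit every point in Python on every iteration, which can be slow for 1,000,000 iterations. Below is a minimal sketch of the same update written with NumPy array operations, plus a check against the closed-form least-squares line from `np.polyfit` (a different technique, used here only as a reference answer). The function names, the synthetic data (y = 3x + 2 plus noise), and the learning rate 0.01 are assumptions for illustration, not values from the notes.

```python
import numpy as np

def step_gradient_vectorized(b, m, points, learning_rate):
    """One gradient-descent update on the MSE, using array ops instead of a loop."""
    x = points[:, 0]
    y = points[:, 1]
    n = len(points)
    residual = y - (m * x + b)                  # y_i - (m x_i + b) for every point
    b_grad = -(2.0 / n) * residual.sum()
    m_grad = -(2.0 / n) * (x * residual).sum()
    return b - learning_rate * b_grad, m - learning_rate * m_grad

def fit(points, learning_rate, num_iterations, b=0.0, m=0.0):
    """Run a fixed number of vectorized updates starting from (b, m)."""
    for _ in range(num_iterations):
        b, m = step_gradient_vectorized(b, m, points, learning_rate)
    return b, m

if __name__ == "__main__":
    # Hypothetical synthetic data: y = 3x + 2 plus small noise (not the notes' data.csv)
    rng = np.random.default_rng(0)
    x = rng.uniform(0, 10, size=200)
    y = 3 * x + 2 + rng.normal(0, 0.5, size=200)
    points = np.column_stack([x, y])
    # Assumed learning rate 0.01, chosen for this data's x range
    b, m = fit(points, learning_rate=0.01, num_iterations=20000)
    # Closed-form least squares for comparison; deg=1 returns [slope, intercept]
    m_ls, b_ls = np.polyfit(x, y, 1)
    print(f"gradient descent: b={b:.4f}, m={m:.4f}")
    print(f"least squares:    b={b_ls:.4f}, m={m_ls:.4f}")
```

If both lines agree to a few decimal places, the gradient-descent loop has converged to the same line the direct solution gives.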

1: Download the correct NumPy package from http://sourceforge.net/projects/numpy/files/
2: Unzip the package.
3: Go to the unzipped folder.
4: Open a command prompt (CMD) in that folder.
5: Install NumPy with this command: python setup.py install
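After step 5, one way to confirm the install worked is to ask Python whether it can find the `numpy` package. A minimal sketch; `numpy_version` is a hypothetical helper name:

```python
import importlib.util
from typing import Optional

def numpy_version() -> Optional[str]:
    """Return the installed NumPy version string, or None if numpy cannot be found."""
    if importlib.util.find_spec("numpy") is None:
        return None
    import numpy  # imported only after confirming it is available
    return numpy.__version__

if __name__ == "__main__":
    version = numpy_version()
    print("numpy not found" if version is None else "numpy " + version)
```

Run it from a directory other than the unzipped source folder, since Python may otherwise pick up the source tree instead of the installed package.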

1: Run the script: python gradient_descent_example.py
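The script reads its input with `genfromtxt("data.csv", delimiter=",")`, so it expects a `data.csv` next to it with one x,y pair per row and no header row (`genfromtxt` would turn a text header into a row of `nan`). If no dataset is at hand, here is a minimal sketch for writing a synthetic one; the function names, slope 1.5, intercept 4.0, noise level, and x range are all hypothetical choices.

```python
import csv
import random

def synthetic_points(n=100, slope=1.5, intercept=4.0, noise=5.0, seed=42):
    """Return n hypothetical (x, y) pairs scattered around y = slope*x + intercept."""
    rng = random.Random(seed)  # seeded so the file is reproducible
    points = []
    for _ in range(n):
        x = rng.uniform(0, 100)
        y = slope * x + intercept + rng.gauss(0, noise)
        points.append((x, y))
    return points

def write_points_csv(path, points):
    """Write points as comma-separated rows with no header, as genfromtxt expects here."""
    with open(path, "w", newline="") as f:
        writer = csv.writer(f)
        for x, y in points:
            writer.writerow([f"{x:.6f}", f"{y:.6f}"])

if __name__ == "__main__":
    write_points_csv("data.csv", synthetic_points())
```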

| Gradient Descent, Linear Regression and running the algorithm using Python |
| Installing NumPy |


PADMANABHASWAMY KSHETRAM

The temple, which houses a sleeping idol of Lord Vishnu, is the richest temple in the world. Treasure worth Rs 100,000 crore (about $20,000,000,000, i.e. 20 billion dollars) was recently found in secret chambers on temple land. Golden crowns, 17 kg of gold coins, an 18 ft long golden necklace weighing 2.5 kg, gold ropes, a sack full of diamonds, thousands of pieces of antique jewellery, and golden vessels were some of the treasures unearthed during the weekend.

Rs. 1 crore = Rs. 1,00,00,000 = Rs. 10,000,000 = Rs. 10 million ≈ $200,000 (at a very approximate rate of Rs. 50 = $1)
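The conversions above are simple arithmetic. A small sketch that reproduces them, assuming the same rough rate of Rs. 50 = $1 (`crore_to_usd` and `indian_grouping` are hypothetical helper names):

```python
CRORE = 10_000_000  # 1 crore = 1,00,00,000 = 10 million rupees

def crore_to_usd(crores: float, rupees_per_dollar: float = 50.0) -> float:
    """Convert an amount in crore rupees to US dollars at a fixed, assumed rate."""
    return crores * CRORE / rupees_per_dollar

def indian_grouping(n: int) -> str:
    """Format a non-negative integer with Indian digit grouping (last 3 digits, then pairs)."""
    s = str(n)
    if len(s) <= 3:
        return s
    head, tail = s[:-3], s[-3:]
    groups = []
    while len(head) > 2:
        groups.insert(0, head[-2:])  # peel off two digits at a time from the right
        head = head[:-2]
    groups.insert(0, head)  # one or two leading digits remain
    return ",".join(groups + [tail])

if __name__ == "__main__":
    print(indian_grouping(CRORE), crore_to_usd(1))  # Rs. 1 crore
    print(indian_grouping(100_000 * CRORE), crore_to_usd(100_000))  # the temple treasure
```

The same function confirms the treasure figure: Rs 100,000 crore is Rs 10,00,00,00,00,000, or about $20 billion at Rs. 50 = $1.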